Last week, Google unveiled Bidirectional Encoder Representations from Transformers, or BERT, which Google VP of search Pandu Nayak calls “the single biggest change we’ve had in the last five years and perhaps one of the biggest since the beginning of the company.”

What is BERT?

In non-rocket science terms, it’s a machine learning algorithm that Google says can help it better understand search queries, which in turn enables the search giant to return more relevant results. According to Nayak, BERT is capable of considering “the full context of a word by looking at the words that come before and after it—particularly useful for understanding the intent behind search queries.”

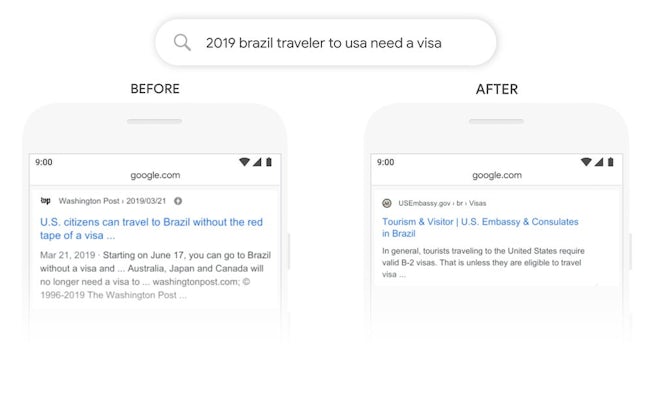

For example, BERT is capable of identifying search queries where prepositions like “for” and “to” are critical to understanding the intention of the query.
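To see why those small words matter, consider a toy sketch in Python. It is not how Google Search or BERT works; the stopword list, the two queries, and the helper names `keyword_bag` and `word_in_context` are all hypothetical and exist only for illustration. It shows that an order-free keyword match that drops prepositions treats two opposite queries as identical, while looking at the words before and after "to" tells them apart.

```python
# Toy illustration only: NOT Google's or BERT's actual implementation.
# STOPWORDS and both queries are hypothetical examples.
STOPWORDS = {"to", "from", "for", "a", "the"}


def keyword_bag(query):
    """Return the order-free set of keywords, with stopwords dropped."""
    return frozenset(w for w in query.lower().split() if w not in STOPWORDS)


def word_in_context(query, word):
    """Return each occurrence of `word` with the word before and after it."""
    tokens = query.lower().split()
    found = []
    for i, token in enumerate(tokens):
        if token == word:
            before = tokens[i - 1] if i > 0 else None
            after = tokens[i + 1] if i + 1 < len(tokens) else None
            found.append((before, token, after))
    return found


q1 = "flights to boston from denver"
q2 = "flights from boston to denver"

# Keyword-only matching cannot tell the two trips apart.
print(keyword_bag(q1) == keyword_bag(q2))  # True

# Reading "to" with its neighbours on both sides reveals the destination.
print(word_in_context(q1, "to"))  # [('flights', 'to', 'boston')]
print(word_in_context(q2, "to"))  # [('boston', 'to', 'denver')]
```

A real language model learns these relationships from data rather than from hand-written rules, but the underlying point is the same: the preposition and its surrounding words carry the intent of the query.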

Google provided a number of real-world examples showing how BERT can produce search results that are much more relevant, such as the following (see Google’s blog post announcement for more examples).

At launch, BERT is being used for featured snippets globally in all languages and is being applied to English-language searches. Google says the technology will be extended to search queries in other languages in the future. BERT does not replace RankBrain, the machine learning algorithm Google launched in 2015 in an effort to deliver more relevant results. Instead, BERT will work alongside RankBrain, being applied where appropriate.

Google estimates that BERT will affect 10% of queries, a not insignificant figure, which raises an important question: what does BERT mean for search marketers?

Obviously, BERT is likely to increase traffic to some pages and decrease traffic to others. If the technology works as described, this should be a good thing overall. Pages that see an increase in traffic are likely to be more relevant to the search query, and thus should perform better because they align with the intent of the search. Pages that see a decrease in traffic were likely less relevant to the search query, and thus probably underperformed anyway.

As for optimizing content for BERT, Search Engine Land’s Barry Schwartz suggests that little can or should be done. He pointed to a tweet posted by Google’s Gary Illyes when RankBrain was launched, which stated “you optimize your content for users and thus for rankbrain. that hasn’t changed.”

Google’s technological advances have sparked a debate about the future of SEO and BERT only adds fuel to the fire. As Google’s natural language chops grow, arguments that SEO as we know it is dead are likely to become more prominent. After all, there’s no reason to optimize content for a search engine when the search engine’s understanding of search queries becomes human-like.

Of course, that doesn’t mean that there is nothing for search marketers to do.

For one, some companies might soon find that as Google’s ability to understand natural language grows, they have reason to update content that was originally developed for traditional SEO so that it’s better suited for human consumption.

Most importantly, companies still have to understand what their target audiences are looking for and create compelling content that delivers on that. The former, keyword research, has always been a key part of traditional SEO and will still play a critical role in content strategy, even as Google’s evolution means that search marketers won’t have to worry as much about the limitations of its technology.
