Search engines need to index your site before it can rank. But it’s not always obvious if the correct pages are indexed. Content that should be indexed may not be. And content that should not be indexed often is.

After evaluating your site’s crawl, indexation is the next step in the technical search engine optimization audit. Use the following six steps to ensure that the right pages are indexed across your ecommerce site.

Number of Pages

How many pages are on your site?

The first step is to determine how many pages from your site should be indexed.

For an approximation, the Pages report in Google Analytics shows all the URLs that have received visitors. Go to Behavior > Site Content > All Pages and set the desired date range at the top right corner. Then scroll to the bottom right. The row count shown there is the number of pages on your site that have driven at least one view.

Alternatively, add up the URLs in all of your XML sitemaps. Make sure, however, that your sitemaps are accurate. For example, many auto-generated XML sitemaps don’t contain product facets, such as the URL for a page selling sweaters with the “black” and “cotton” filters applied.
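As a rough illustration of the sitemap tally, the Python sketch below counts the distinct URLs across several sitemaps. The sitemap contents and the function names are made up for the example. It assumes plain `<urlset>` sitemaps, not sitemap index files, and it counts a URL once even if it appears in more than one sitemap.

```python
import xml.etree.ElementTree as ET

# XML namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> set[str]:
    """Return the unique <loc> URLs listed in one <urlset> sitemap."""
    root = ET.fromstring(xml_text)
    return {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    }


def count_unique_urls(sitemaps: list[str]) -> int:
    """Count distinct URLs across several sitemap documents."""
    unique: set[str] = set()
    for xml_text in sitemaps:
        unique |= sitemap_urls(xml_text)
    return len(unique)


# Hypothetical sitemap contents; in practice, read each file from disk or fetch it.
PRODUCTS = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://domain.com/category/product-abc</loc></url>
  <url><loc>https://domain.com/category/product-xyz</loc></url>
</urlset>"""

CATEGORIES = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://domain.com/category</loc></url>
  <url><loc>https://domain.com/category/product-abc</loc></url>
</urlset>"""

print(count_unique_urls([PRODUCTS, CATEGORIES]))  # 3 distinct URLs
```

Deduplicating with a set matters here because a product URL listed in two sitemaps is still only one page to index.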

Indexation Levels

How many pages are indexed?

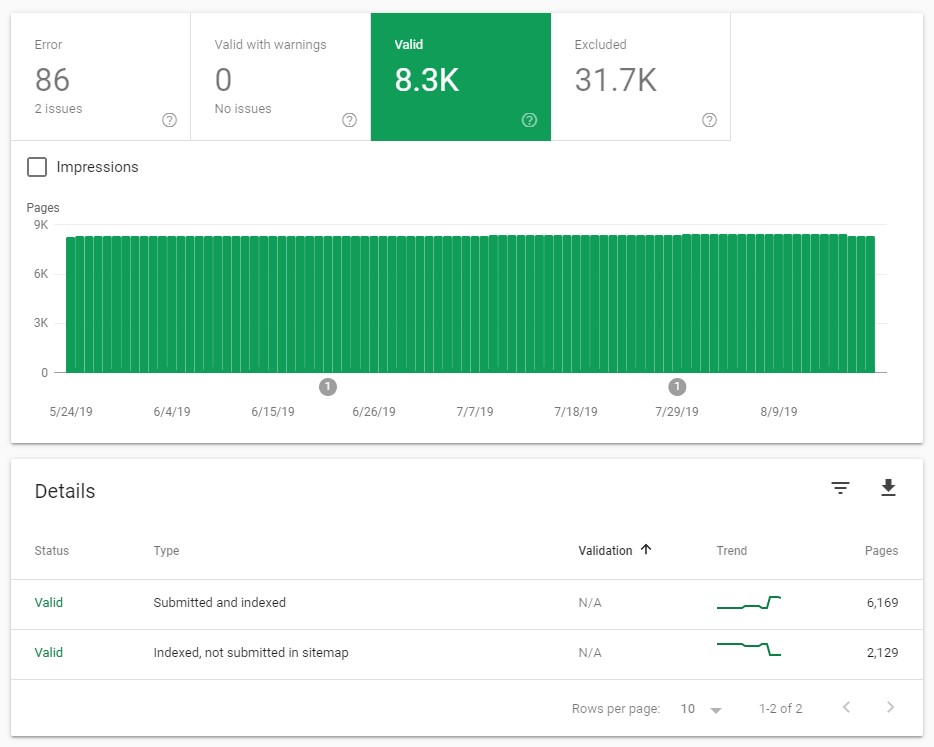

Google Search Console’s Coverage report shows site errors and indexation data.

The Google Search Console Coverage report gives the exact number of indexed and blocked pages as well as crawl errors encountered by Googlebot. It also shows the number of indexed pages in the XML sitemaps, and the number of indexed pages that were not in the XML sitemaps.

Bing Webmaster Tools’ Index Explorer has similar capabilities.
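If you export the indexed URLs from one of these reports, the same comparison against your sitemaps can be reproduced offline. The Python sketch below assumes two hypothetical sets of URLs, one exported from an index report and one taken from your XML sitemaps. The function name and bucket labels are illustrative, not part of any tool.

```python
def compare_index_to_sitemap(
    indexed: set[str], in_sitemap: set[str]
) -> dict[str, set[str]]:
    """Split URLs into the three buckets an indexation review cares about."""
    return {
        "indexed_and_in_sitemap": indexed & in_sitemap,
        "indexed_not_in_sitemap": indexed - in_sitemap,
        "in_sitemap_not_indexed": in_sitemap - indexed,
    }


# Hypothetical data standing in for a report export and a sitemap crawl.
indexed_urls = {
    "https://domain.com/category/product-abc",
    "https://domain.com/search?q=sweaters",
}
sitemap_url_set = {
    "https://domain.com/category/product-abc",
    "https://domain.com/category/product-xyz",
}

report = compare_index_to_sitemap(indexed_urls, sitemap_url_set)
for bucket, urls in report.items():
    print(bucket, len(urls))
```

URLs that are indexed but absent from the sitemaps, and URLs in the sitemaps that are not indexed, are the two lists worth reviewing by hand.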

Indexation Value

Are the indexed pages valuable to searchers?

Not all pages are valuable to organic search. Examples include internal search results and, perhaps, terms-of-use pages. Both are useful to shoppers on the site, but neither should be indexed or appear in search results.

Product, category, and certain filtered-browse pages have value because they represent phrases that people would search for — such as “black cotton sweaters.”

Analyze your Coverage report to ensure bots can crawl and index the pages that have value, but cannot crawl or index the others. Make sure that valuable pages are listed in the indexation reports and that pages with no value are not.
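One part of this check, whether bots are allowed to crawl a given URL, can be sketched with Python’s standard `urllib.robotparser`. The robots.txt rules and URL lists below are hypothetical, and `crawl_mismatches` is an illustrative helper name. Note that robots.txt governs crawling only; keeping a page out of the index is handled separately, for example with a noindex directive.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks internal search but forgets terms-of-use.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def crawl_mismatches(rules, should_crawl, should_block, user_agent="Googlebot"):
    """Return (wrongly_blocked, wrongly_open) URL lists for the given rules."""
    wrongly_blocked = [u for u in should_crawl if not rules.can_fetch(user_agent, u)]
    wrongly_open = [u for u in should_block if rules.can_fetch(user_agent, u)]
    return wrongly_blocked, wrongly_open


# Hypothetical URLs: pages with search value versus pages without.
valuable = [
    "https://domain.com/category/sweaters",
    "https://domain.com/category/product-abc",
]
no_value = [
    "https://domain.com/search?q=sweaters",
    "https://domain.com/terms-of-use",
]

blocked, still_open = crawl_mismatches(parser, valuable, no_value)
print("Valuable but blocked:", blocked)
print("No value but crawlable:", still_open)
```

In this made-up case the valuable pages are all crawlable, while the terms-of-use page is flagged as still open to bots.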

Duplicate Content

Does your platform generate duplicate content?

When the exact same content displays at more than one URL, that’s duplicate content. It weakens the link authority of the duplicate pages, creates self-competition for rankings, and wastes crawl equity. Eliminate duplicate content wherever possible.

Ecommerce platforms frequently generate duplicate content. Common culprits include:

Protocol. Your ecommerce site should use the secure HTTPS protocol. If the HTTP version of a URL also loads without redirecting to HTTPS, that’s duplicate content.

Domain. Some businesses host the same site, or close variations, on separate domains. Examples:

- https://domain.com/category/product-abc

- https://domainstore.com/category/product-abc

Subdomain. If you can load the same content at the non-www URL and www subdomain, or any other subdomain, that’s duplicate content. Examples:

- https://domain.com

- https://www.domain.com

Top-level domain. Different TLDs can also host duplicate content. Examples:

- https://domain.com

- https://domain.biz

Click paths. Clicking to the same subcategory or product page via different click paths can result in a different URL and, thus, duplicate content. Examples:

- https://domain.com/category/product-abc

- https://domain.com/category/subcategory/product-abc

Case. Allowing the same content to display regardless of upper- or lowercase letters in the URL can result in duplicate content. Examples:

- https://domain.com/category/product-abc

- https://domain.com/Category/Product-Abc

Duplicate content can compound, potentially creating hundreds of URL variations for a single page of content. For example, a site could use two protocols and two subdomains, which would create four URLs for the same page, such as:

- https://domain.com

- https://www.domain.com

- http://domain.com

- http://www.domain.com

Imagine how many duplicate pages would exist if a site carried all of the common culprits above, plus a few others not mentioned.
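To make the compounding concrete, the Python sketch below generates the variants produced by just three of the culprits (protocol, subdomain, and case) and then collapses them to a single canonical URL. The `canonicalize` function is hypothetical and rests on assumptions: HTTPS and the www host are the preferred versions, there are no other subdomains, and the site treats paths as case-insensitive.

```python
from itertools import product
from urllib.parse import urlsplit, urlunsplit


def canonicalize(url: str) -> str:
    """Map a URL variant to one preferred form (assumed: https, www, lowercase path)."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if not host.startswith("www."):
        host = "www." + host  # assumption: www is the preferred host
    path = parts.path.lower() or "/"  # assumption: paths are case-insensitive
    return urlunsplit(("https", host, path, parts.query, ""))


protocols = ["http", "https"]
hosts = ["domain.com", "www.domain.com"]
paths = ["/category/product-abc", "/Category/Product-Abc"]

# Every combination is a separate URL that could be crawled and indexed.
variants = [f"{scheme}://{host}{path}" for scheme, host, path in product(protocols, hosts, paths)]
print(len(variants))  # 2 protocols x 2 hosts x 2 cases = 8 URLs

canonical = {canonicalize(v) for v in variants}
print(canonical)  # one URL remains
```

Each additional culprit multiplies the total, which is how a single page can end up with hundreds of URLs.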

Structured Data

Does each page or template contain relevant structured data?

Structured data communicates page relevance and organization to bots. It is not visible to shoppers. Some elements help search engines understand a page. Others, such as rating stars, can enhance listings in search results by generating rich snippets that grab searchers’ attention and increase clicks.

Use Google’s Structured Data Testing Tool to identify and validate structured data. This example is a product page on Walmart.com.

Use Google’s Structured Data Testing Tool to verify your markup. The tool displays the source code for the page on the left and the structured data on the right. Errors and warnings appear in orange. Clicking the green button previews how the search results listing would look if Google used all of the possible rich snippet features.
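For a sense of what such markup looks like, the Python sketch below builds schema.org Product data as JSON-LD, one common format for structured data, and wraps it in the script tag that would sit in a page template. The product name, price, and rating values are invented for the example, and `product_jsonld` is an illustrative helper, not part of any platform.

```python
import json


def product_jsonld(name: str, price: str, currency: str,
                   rating: str, review_count: str) -> str:
    """Return a JSON-LD script tag describing one product (schema.org Product)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
        "offers": {
            "@type": "Offer",
            "price": price,
            "priceCurrency": currency,
        },
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Hypothetical product values for illustration only.
tag = product_jsonld("Black Cotton Sweater", "39.99", "USD", "4.5", "27")
print(tag)
```

The `aggregateRating` block is the kind of element that can produce rating stars in a listing; whether a search engine displays them is its decision.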

PDF Optimization

Are PDF files viewable on the site, and downloadable?

The PDF format is common for instruction manuals, reports, product features, and other info. Often, all that appears on a site is a link to the downloadable PDF. That link is necessary, but relying on it alone misses a sales opportunity.

PDF files are indexable, but search engines tend to rank standard web pages higher for a given query. Moreover, a searcher landing on a PDF file has no way to navigate to your site. There’s no obvious link, click-to-call phone number, or next-step form to submit.

The solution, typically, is to present the PDF in a dedicated “viewer” page that’s included in your overall site navigation.