Boffins have found a role for AI chatbots where habitual hallucination isn’t necessarily a liability.

The eggheads – based at the University of Pennsylvania and the University of Maryland in the US – enlisted OpenAI’s large language models (LLMs) to help with fantasy role-playing, specifically Dungeons & Dragons (D&D).

In a preprint paper titled “CALYPSO: LLMs as Dungeon Masters’ Assistants,” Andrew Zhu, a UPenn doctoral student; Lara Martin, assistant professor at UMD; Andrew Head, assistant professor at UPenn; and Chris Callison-Burch, associate professor at UPenn, explain how they used LLMs to enhance a game that depends heavily on human interaction.

D&D first appeared in 1974 as a role-playing game (RPG) in which players assumed the roles of adventuring medieval heroes and acted out those personalities under a storyline directed by a dungeon master (DM) or game master (GM). The prerequisites were a set of rules – published at the time by Tactical Studies Rules – polyhedral dice, pencil, paper, and a shared commitment to interactive storytelling and modest theatrics. Snacks, technically optional, should be assumed.

Alongside such tabletop role-playing, the spread of personal computers in the 1980s led to various computerized versions, ranging from computer-aided play to entirely electronic simulations – like the recently released Baldur’s Gate 3, to name just one of hundreds of titles inspired by D&D and other RPGs.

The academic gamers from UPenn and UMD set out to see how LLMs could support human DMs, who are responsible for setting the scene where the mutually imagined adventure takes place, for rolling the dice that determine the outcomes of certain actions, for enforcing the rules (which have become rather extensive), and for generally ensuring that the experience is fun and entertaining.

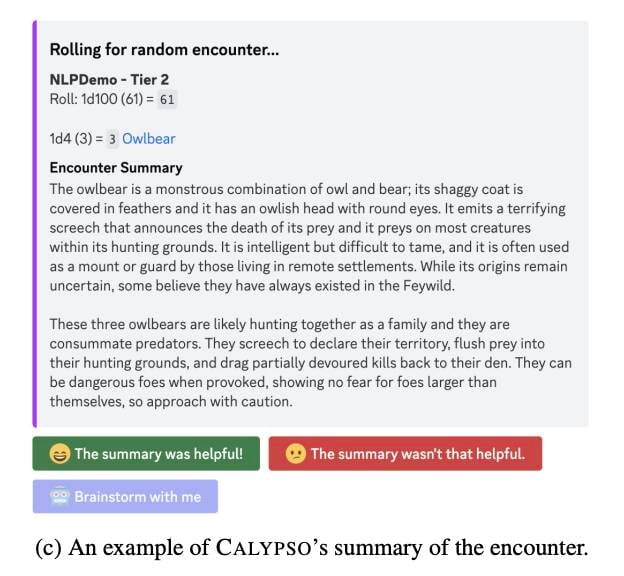

To do so, they created a set of three LLM-powered interfaces, called CALYPSO – which stands for Collaborative Assistant for Lore and Yielding Plot Synthesis Objectives. It’s designed for playing D&D online through Discord, the popular chat service.

“When given access to CALYPSO, DMs reported that it generated high-fidelity text suitable for direct presentation to players, and low-fidelity ideas that the DM could develop further while maintaining their creative agency,” the paper explains. “We see CALYPSO as exemplifying a paradigm of AI-augmented tools that provide synchronous creative assistance within established game worlds, and tabletop gaming more broadly.”

The COVID-19 pandemic pushed some in-person tabletop gaming online, the researchers observe in their paper, and many players who game via Discord do so with Avrae, a Discord bot that Zhu himself designed.

“The core ideas in the paper (that LLMs are capable of acting as a co-DM in ways that help inspire the human DM without taking over creative control of the game) apply to D&D and other tabletop games regardless of modality. But there are still some challenges to overcome before applying the tech to in-person gaming,” said Zhu in an email to The Register.

Zhu and his colleagues focused on Discord play-by-post (PBP) gaming for several reasons. First, “Discord-based PBP is text-based already, so we don’t have to spend time transcribing speech into text for a LLM,” he explained.

The online setup also lets the DM view LLM-generated output privately, where “low-fidelity ideas” matter less, and it frees the DM from having to type or dictate into a separate interface.

CALYPSO, a Discord bot with publicly available source code, is described in the paper as having three interfaces: one for generating the setup text describing an encounter (GPT-3); one for focused brainstorming, in which the DM can ask the LLM questions about an encounter or ask it to refine an encounter summary (ChatGPT); and one for open-domain chat, in which players can engage directly with ChatGPT acting as a fantasy creature knowledgeable about D&D.

Setting up these interfaces involved seeding the LLM with specific prompts (detailed in the paper) that explain how the chatbot should respond in each role. No D&D-specific model training was required.
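To make the idea of per-role seed prompts concrete, here is a minimal Python sketch. It is not CALYPSO’s code: the interface keys, the `build_request` helper, and the prompt wording are hypothetical placeholders (the real prompts are in the paper). Only the three roles and the model family assigned to each come from the description above. The GPT-3 role is shown as a single completion-style prompt string and the ChatGPT roles as system/user message lists, on the assumption that a chat model takes role-tagged messages.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Interface:
    model: str          # model family named in the article
    seed_prompt: str    # hypothetical placeholder text, not the paper's prompt
    chat_style: bool    # True: role-tagged messages; False: one completion prompt


# Hypothetical seed prompts; only the roles and model families come from the article.
INTERFACES = {
    "encounter": Interface(
        model="GPT-3",
        seed_prompt="Write a description of this D&D encounter for the players.",
        chat_style=False,
    ),
    "brainstorm": Interface(
        model="ChatGPT",
        seed_prompt="You are helping a Dungeon Master brainstorm about an encounter.",
        chat_style=True,
    ),
    "chat": Interface(
        model="ChatGPT",
        seed_prompt="You are a fantasy creature who knows a lot about D&D.",
        chat_style=True,
    ),
}


def build_request(interface_key: str, user_text: str) -> str | list[dict[str, str]]:
    """Combine an interface's seed prompt with the DM's or player's input."""
    iface = INTERFACES[interface_key]
    if not iface.chat_style:
        # Completion-style model: seed prompt and input joined into one string.
        return f"{iface.seed_prompt}\n\n{user_text}"
    # Chat-style model: the seed prompt becomes the system message.
    return [
        {"role": "system", "content": iface.seed_prompt},
        {"role": "user", "content": user_text},
    ]


if __name__ == "__main__":
    print(build_request("encounter", "Two frost salamanders guard an icy cave."))
    print(build_request("chat", "What does a frost salamander eat?"))
```

Because no D&D-specific training is involved in this setup, changing how a role behaves comes down to editing its seed prompt.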

“We found that even without training, the GPT series of models knows a lot about D&D from having seen source books and internet discussions in its training data,” said Zhu.

Zhu and his colleagues tested CALYPSO with 71 players and DMs, then surveyed them about the experience. They found the AI helper useful more often than not.

But there was room for improvement. For example, in one encounter, CALYPSO simply paraphrased the setting and statistics information it had been given in its prompt, which DMs felt added no value.

The Register asked Zhu whether the tendency of LLMs to “hallucinate” – make things up – was an issue for study participants.

“In a creative context, it becomes a little less meaningful – for example, the D&D reference books don’t contain every detail about every monster, so if an LLM asserts that a certain monster has certain colored fur, does that count as a hallucination?” said Zhu.

“To answer the question directly, yes; the model often ‘makes up’ facts about monsters that aren’t in the source books. Most of these are trivial things that actually help the DM, like how a monster’s call sounds or the shape of a monster’s iris or things like that. Sometimes, less often, it hallucinates more drastic facts, like saying frost salamanders have wings (they don’t).”

Another issue that cropped up was that safeguards added during model training sometimes interfered with CALYPSO’s ability to discuss topics that would be perfectly appropriate in a game of D&D, such as fantasy races or playing the game itself.

“For example, the model would sometimes refuse to suggest (fantasy) races, likely due to efforts to reduce the potential for real-world racial bias,” the paper observes. “In another case, the model insists that it is incapable of playing D&D, likely due to efforts to prevent the model from making claims of abilities it does not possess.”

(Yes, we’re sure some of us have been there before, denying any knowledge of RPGs despite years of playing.)

Zhu said it’s clear that people don’t want an AI DM, but they’re more willing to let DMs lean on AI help.

“During our formative studies a common theme was that people didn’t want an autonomous AI DM, for a couple reasons,” he explained. “First, many of the players we interviewed had already played with tools like AI Dungeon, and were familiar with AI’s weaknesses in long-context storytelling. Second, and more importantly, they expressed that having an autonomous AI DM would take away from the spirit of the game; since D&D is a creative storytelling game at heart, having an AI generate that story would feel wrong.

“Having CALYPSO be an optional thing that DMs could choose to use as much or as little as they wanted helped keep the creative ball in the human DM’s court; often what would happen is that CALYPSO would give the DM just enough of a nudge to break them out of a rut of writer’s block or just give them a list of ideas to build off of. Once the human DM felt like they wanted more control over the scene, they could just continue DMing in their own style without using CALYPSO at all.” ®