30-second summary:

- The present reality is that Google presses the button and updates its algorithm, which in turn can update site rankings

- What if we are entering a world where it is less of Google pressing a button and more of the algorithm automatically updating rankings in “real-time”?

- Advisory Board member and Wix’s Head of SEO Branding, Mordy Oberstein, shares his data observations and insights

If you’ve been doing SEO even for a short while, chances are you’re familiar with a Google algorithm update. Every so often, whether we like it or not, Google presses the button and updates its algorithm, which in turn can update our rankings. The key phrase here is “presses the button.”

But, what if we are entering a world where it’s less of Google pressing a button and more of the algorithm automatically updating rankings in “real-time”? What would that world look like and who would it benefit?

What do we mean by continuous real-time algorithm updates?

It is obvious that technology is constantly evolving, but what needs to be made clear is that this applies to Google’s algorithm as well. As the technology available to Google improves, the search engine can do things like better understand content and assess websites. However, this technology needs to be incorporated into the algorithm. In other words, as new technology becomes available to Google or as the current technology improves (we might refer to this as machine learning “getting smarter”), Google needs to “make them a part” of its algorithms in order to utilize these advancements.

Take MUM for example. Google has started to use aspects of MUM in the algorithm. However, (at the time of writing) MUM is not fully implemented. As time goes on and based on Google’s previous announcements, MUM is almost certainly going to be applied to additional algorithmic tasks.

Of course, once Google introduces new technology or has refined its current capabilities it will likely want to reassess rankings. If Google is better at understanding content or assessing site quality, wouldn’t it want to apply these capabilities to the rankings? When it does so, Google “presses the button” and releases an algorithm update.

So, say one of Google’s current machine-learning properties has evolved. It’s taken the input over time and has been refined – it’s “smarter” for lack of a better word. Google may elect to “reintroduce” this refined machine learning property into the algorithm and reassess the pages being ranked accordingly.

These updates are specific and purposeful. Google is “pushing the button.” This is most clearly seen when Google announces something like a core update or product review update or even a spam update.

In fact, perhaps nothing better concretizes what I’ve been saying here than what Google said about its spam updates:

“While Google’s automated systems to detect search spam are constantly operating, we occasionally make notable improvements to how they work…. From time to time, we improve that system to make it better at spotting spam and to help ensure it catches new types of spam.”

In other words, Google was able to develop an improvement to a current machine learning property and released an update so that this improvement could be applied to ranking pages.

If this process is “manual” (to use a crude word), what then would continuous “real-time” updates be? Let’s take Google’s Product Review Updates. Initially released in April of 2021, Google’s Product Review Updates aim at weeding out product review pages that are thin, unhelpful, and (if we’re going to call a spade a spade) exist essentially to earn affiliate revenue.

To do this, Google is using machine learning in a specific way, looking at specific criteria. With each iteration of the update (such as there was in December 2021, March 2022, etc.) these machine learning apparatuses have the opportunity to recalibrate and refine. Meaning, they can be potentially more effective over time as the machine “learns” – which is kind of the point when it comes to machine learning.

What I theorize, at this point, is that as these machine learning properties refine themselves, rank fluctuates accordingly. Meaning, Google allows machine learning properties to “recalibrate” and impact the rankings. Google then reviews and analyzes and sees if the changes are to its liking.

We may know this process as unconfirmed algorithm updates (for the record I am 100% not saying that all unconfirmed updates are as such). It’s why I believe there is such a strong tendency towards rank reversals in between official algorithm updates.

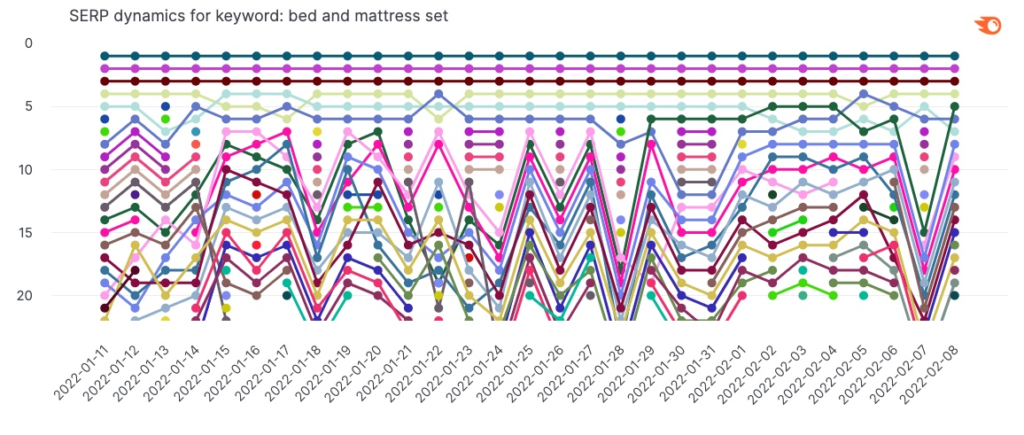

It’s quite common that the SERP will see a noticeable increase in rank fluctuations that can impact a page’s rankings only to see those rankings reverse back to their original position with the next wave of rank fluctuations (whether that be a few days later or weeks later). In fact, this process can repeat itself multiple times. The net effect is a given page seeing rank changes followed by reversals or a series of reversals.

A series of rank reversals impacting almost all pages ranking between position 5 and 20 that align with across-the-board heightened rank fluctuations
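To make the pattern concrete, here is a minimal sketch of how a rank reversal could be flagged in a page's daily position history. The function name, the `min_move` and `tolerance` thresholds, and the sample positions are all assumptions made for illustration; they are not taken from Semrush or any other rank tracker.

```python
from typing import List, Tuple


def find_rank_reversals(
    positions: List[int],
    min_move: int = 3,   # assumed: smallest day-over-day change that counts as a "move"
    tolerance: int = 1,  # assumed: how close to the old position counts as "reversed"
) -> List[Tuple[int, int]]:
    """Return (move_day, reversal_day) index pairs.

    A move is a day-over-day change of at least `min_move` positions.
    A reversal is the first later day on which the page is back within
    `tolerance` of the position it held just before the move.
    """
    reversals = []
    i = 1
    while i < len(positions):
        before = positions[i - 1]
        if abs(positions[i] - before) >= min_move:
            for j in range(i + 1, len(positions)):
                if abs(positions[j] - before) <= tolerance:
                    reversals.append((i, j))
                    i = j  # resume scanning after the reversal day
                    break
        i += 1
    return reversals


# Made-up daily positions for one page: two moves, each later undone.
history = [8, 8, 14, 14, 13, 8, 8, 12, 8]
print(find_rank_reversals(history))  # [(2, 5), (7, 8)]
```

A move that never returns to its starting point produces no pair, which is roughly the distinction between a lasting ranking change and a reversal.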

This trend, as I see it, is Google allowing its machine learning properties to evolve or recalibrate (or however you’d like to describe it) in real-time. Meaning, no one is pushing a button over at Google but rather the algorithm is adjusting to the continuous “real-time” recalibration of the machine learning properties.

It’s this dynamic that I am referring to when I question if we are heading toward “real-time” or “continuous” algorithmic rank adjustments.

What would a continuous real-time Google algorithm mean?

So what? What if Google adopted a continuous real-time model? What would the practical implications be?

In a nutshell, it would mean that rank volatility would be far more of a constant. Instead of waiting for Google to push the button on an algorithm update in order for rank to be significantly impacted, significant rank movement would simply be the norm. The algorithm would be constantly evaluating pages/sites “on its own” and making adjustments to rank in something closer to real-time.

Another implication would be not having to wait for the next update for restoration. While not a hard-and-fast rule, if you are significantly impacted by an official Google update, such as a core update, you generally won’t see rank restoration occur until the release of the next version of the update – whereupon your pages will be re-evaluated. In a real-time scenario, pages are constantly being evaluated, much the way links are with Penguin 4.0, which was released in 2016. To me, this would be a major change to the current “SERP ecosystem.”

I would even argue that, to an extent, we already have a continuous “real-time” algorithm. That we at least partially have a real-time Google algorithm is simply a fact. As mentioned, in 2016 Google released Penguin 4.0, which removed the need to wait for another version of the update, as this specific algorithm evaluates pages on a constant basis.

However, outside of Penguin, what do I mean when I say that, to an extent, we already have a continuous real-time algorithm?

The case for real-time algorithm adjustments

The constant “real-time” rank adjustments that occur in the ecosystem are so significant that they have redefined the volatility landscape.

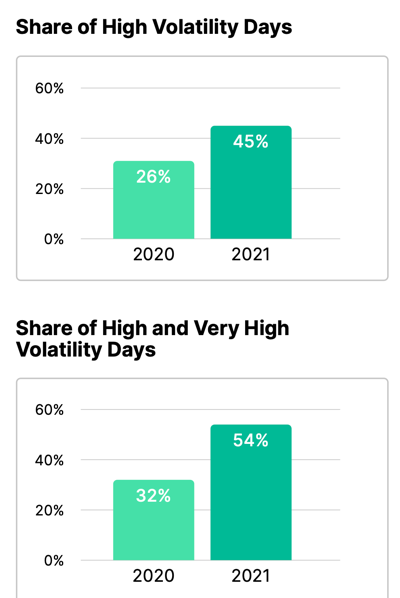

Per Semrush data I pulled, there was a 58% increase in the number of days that reflected high rank volatility in 2021 as compared to 2020. Similarly, there was a 59% increase in the number of days that reflected either high or very high levels of rank volatility.

Simply put, there is a significant increase in the number of instances that reflect elevated levels of rank volatility. After studying these trends and looking at the ranking patterns, I believe the aforementioned rank reversals are the cause. Meaning, a large portion of the increased instances in rank volatility are coming from what I believe to be machine learning continually recalibrating in “real-time,” thereby producing unprecedented levels of rank reversals.

Supporting this is the fact that, along with the increased instances of rank volatility, we did not see increases in how drastic the rank movement is. Meaning, there are more instances of rank volatility but the degree of volatility did not increase.

In fact, there was a decrease in how dramatic the average rank movement was in 2021 relative to 2020!
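The two measurements being contrasted here (how often volatility is high versus how large the movement is) can be separated with a small sketch like the one below. The daily scores are invented purely for illustration, and the `HIGH_THRESHOLD` cutoff and function names are assumptions; they do not reproduce Semrush's scale or the data behind the figures above.

```python
from statistics import mean
from typing import Dict, List

HIGH_THRESHOLD = 5.0  # assumed cutoff for a "high volatility" day


def summarize(scores: List[float], threshold: float = HIGH_THRESHOLD) -> Dict[str, float]:
    """Count high-volatility days and average their scores."""
    high = [s for s in scores if s >= threshold]
    return {
        "high_days": len(high),
        "avg_high_score": mean(high) if high else 0.0,
    }


def pct_change(old: float, new: float) -> float:
    """Percentage change from `old` to `new`."""
    return (new - old) / old * 100.0


# Invented daily volatility scores for two periods (not real data).
earlier = [2.0, 6.0, 3.0, 8.0, 2.5, 9.0]
later = [5.5, 6.0, 3.0, 5.0, 6.5, 5.5]

a, b = summarize(earlier), summarize(later)
print("change in high-volatility days (%):", pct_change(a["high_days"], b["high_days"]))
print("average score on high days:", a["avg_high_score"], "->", b["avg_high_score"])
```

In this toy data the later period has more high-volatility days but a lower average score on those days, which is the same shape as the trend described: more frequent movement, less dramatic movement.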

Why? Again, I chalk this up to the recalibration of machine learning properties and their “real-time” impact on rankings. In other words, we’re starting to see more micro-movements that align with the natural evolution of Google’s machine-learning properties.

When a machine learning property is refined as its intake/learning advances, you’re unlikely to see enormous swings in the rankings. Rather, you will see a refinement in the rankings that aligns with the refinement in the machine learning itself.

Hence, the rank movement we’re seeing, as a rule, is far more constant yet not as drastic.

The final step towards continuous real-time algorithm updates

While much of the ranking movement that occurs is continuous in that it is not dependent on specific algorithmic refreshes, we’re not fully there yet. As I mentioned, much of the rank volatility is a series of reversing rank positions. Changes to these ranking patterns, again, are often not solidified until the rollout of an official Google update, most commonly, an official core algorithm update.

Until the longer-lasting ranking patterns are set without the need to “press the button” we don’t have a full-on continuous or “real-time” Google algorithm.

However, I have to wonder if the trend is not heading toward that. For starters, Google’s Helpful Content Update (HCU) does function in real-time.

Per Google:

“Our classifier for this update runs continuously, allowing it to monitor newly-launched sites and existing ones. As it determines that the unhelpful content has not returned in the long-term, the classification will no longer apply.”

How is this so? The same as what we’ve been saying all along here – Google has allowed its machine learning to have the autonomy it would need to be “real-time” or as Google calls it, “continuous”:

“This classifier process is entirely automated, using a machine-learning model.”

For the record, continuous does not mean ever-changing. In the case of the HCU, there’s a logical validation period before restoration. Should we ever see a “truly” continuous real-time algorithm, this may apply in various ways as well. I don’t want to imply that, should we ever see a “real-time” algorithm, there will be a ranking response the second you make a change to a page.

At the same time, the “traditional” officially “button-pushed” algorithm update has become less impactful over time. In a study I conducted back in late 2021, I noticed that Semrush data indicated that since 2018’s Medic Update, the core updates being released were becoming significantly less impactful.

Data indicates that Google’s core updates are presenting less rank volatility overall as time goes on

Subsequently, this trend has continued. Per my analysis of the September 2022 Core Update, there was a noticeable drop-off in the volatility seen relative to the May 2022 Core Update.

Rank volatility change was far less dramatic during the September 2022 Core Update relative to the May 2022 Core Update

It’s a dual convergence. Google’s core update releases seem to be less impactful overall (obviously, individual sites can get slammed just as hard) while at the same time its latest update (the HCU) is continuous.

To me, it all points towards Google looking to abandon the traditional algorithm update release model in favor of a more continuous construct. (Further evidence could be in how the release of official updates has changed. If you look back at the various outlets covering these updates, the data will show you that the roll-out now tends to be slower, with fewer days of increased volatility and, again, with less overall impact.)

The question is, why would Google want to go to a more continuous real-time model?

Why a continuous real-time Google algorithm is beneficial

A real-time continuous algorithm? Why would Google want that? It’s pretty simple, I think. Having an update that continuously refreshes rankings to reward the appropriate pages and sites is a win for Google (again, I don’t mean instant content revision or optimization resulting in instant rank change).

Which is more beneficial to Google’s users? A continuous-like updating of the best results or periodic updates that can take months to present change?

The idea of Google continuously analyzing and updating in a more real-time scenario is simply better for users. How does it help a user looking for the best result to have rankings that reset periodically with each new iteration of an official algorithm update?

Wouldn’t it be better for users if a site, upon seeing its rankings slip, made changes that resulted in some great content, and instead of waiting months to have it rank well, users could access it on the SERP far sooner?

Continuous algorithmic implementation means that Google can get better content in front of users far faster.

It’s also better for websites. Do you really enjoy implementing a change in response to ranking loss and then having to wait perhaps months for restoration?

Also, the fact that Google would so heavily rely on machine learning and trust the adjustments it was making only happens if Google is confident in its ability to understand content, relevancy, authority, etc. SEOs and site owners should want this. It means that Google could rely less on secondary signals and more directly on the primary commodity, content and its relevance, trustworthiness, etc.

Google being able to more directly assess content, pages, and domains overall is healthy for the web. It also opens the door for niche sites and sites that are not massive super-authorities (think the Amazons and WebMDs of the world).

Google’s better understanding of content creates more parity. Google moving towards a more real-time model would be a manifestation of that better understanding.

A new way of thinking about Google updates

A continuous real-time algorithm would intrinsically change the way we would have to think about Google updates. It would, to a greater or lesser extent, make tracking updates as we now know them essentially obsolete. It would change the way we look at SEO weather tools in that, instead of looking for specific moments of increased rank volatility, we’d pay more attention to overall trends over an extended period of time.
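As a rough illustration of what watching overall trends rather than single spikes might look like, the sketch below smooths a series of daily volatility scores with a simple rolling average. The function name, the window length, and the sample scores are assumptions for illustration only; SEO weather tools may compute their trends quite differently.

```python
from typing import List


def rolling_mean(scores: List[float], window: int = 7) -> List[float]:
    """Return the mean of each full `window`-day span, oldest span first."""
    if window <= 0:
        raise ValueError("window must be positive")
    out = []
    running = 0.0
    for i, score in enumerate(scores):
        running += score
        if i >= window:
            running -= scores[i - window]  # drop the day that left the window
        if i >= window - 1:
            out.append(running / window)
    return out


# Invented daily scores: one sharp spike on an otherwise steady baseline.
daily = [3.0, 3.0, 9.0, 3.0, 3.0, 3.0, 3.0, 3.0]
print(rolling_mean(daily, window=4))
```

A single spike barely moves the smoothed line, while a sustained rise in day-to-day fluctuation would lift it for as long as the rise lasts, which is the kind of signal a trend-focused reading would prioritize.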

Based on the ranking trends we already discussed, I’d argue that, to a certain extent, that time has already come. We’re already living in an environment where rankings fluctuate far more than they used to, which, to an extent, has redefined what stable rankings mean in many situations.

To both conclude and put things simply, edging closer to a continuous real-time algorithm is part and parcel of a new era in ranking organically on Google’s SERP.

Mordy Oberstein is Head of SEO Branding at Wix. Mordy can be found on Twitter @MordyOberstein.
