Taking control of a site’s technical SEO is both an art and a science. Take it from me, a content strategist at heart: becoming proficient in technical SEO requires a balance of knowledge, diligence, and grit. And for many, taking on such technical matters can feel both daunting and complicated.

But as code-heavy and cumbersome as technical SEO may seem, grasping its core concepts is well within reach for most search marketers. Yes, it helps to have HTML chops or a developer on hand to help implement scripts and such. However, the idea of delivering top-tier technical SEO services shouldn’t feel as intimidating as it does for most agencies and consultants.

To help you dial in the technical side of your SEO services, I’ve shared five places to start. These steps reflect the 80/20 of technical SEO, and much of what I have adopted from my code-savvy colleagues over the past decade.

1. Verify Google Analytics, Tag Manager, and Search Console; define conversions

If you maintain any ongoing SEO engagements, it’s critical to set up Google Analytics or a comparable web analytics platform. Additionally, setting up Google Tag Manager and Google Search Console will provide you with further technical SEO capabilities and information about a site’s health.

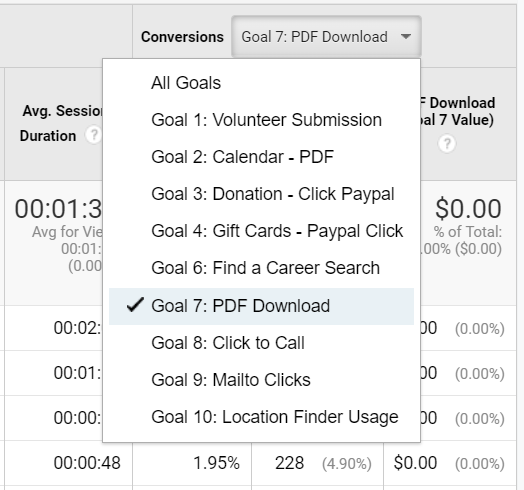

Beyond just verifying a site on these platforms, you’ll want to define some KPIs and points of conversion. This may be as simple as tracking organic traffic and form submissions, or as advanced as setting up five different conversion goals, such as form submissions, store purchases, PDF downloads, Facebook follows, and email sign-ups. In short, without any form of conversion tracking in place, you’re essentially going in blind.

Determining how you measure a site’s success is essential to delivering quality SEO services. From a technical standpoint, both Google Search Console and Analytics can provide critical insights to help you make ongoing improvements. These include crawl errors, duplicate metadata, toxic links, high-bounce pages, and drop-offs, to name a few.

2. Implement structured data markup

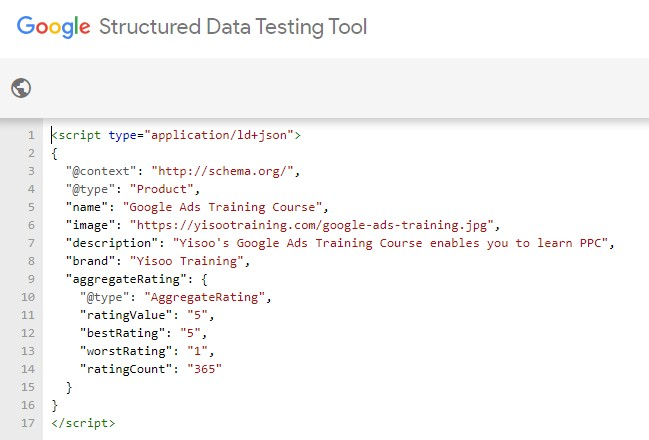

Implementing structured data markup has become an integral element of technical SEO. Because it has been a topic of focus for Google in recent years, more and more search marketers are embracing ways to employ structured data markup, or Schema, for their clients. In turn, many CMS platforms are now equipped with simple plugins and developer capabilities to implement Schema.

In essence, Schema is a unique form of markup that was developed to help webmasters better communicate a site’s content to search engines. By tagging certain elements of a page’s content with Schema markup (e.g., Reviews, Aggregate Rating, Business Location, Person), you help Google and other search engines better interpret and display such content to users.

With this markup in place, your site’s search visibility can improve with features like rich snippets, expanded meta descriptions, and other enhanced listings that may offer a competitive advantage. Within Google Search Console, not only can you use a handy validation tool to help assess a site’s markup, but the platform will also log any errors it finds regarding structured data.
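To make the idea of tagging content more concrete, here is a minimal sketch in Python that builds a JSON-LD snippet, one of the formats search engines accept for Schema markup. The business name, address, and rating values are made up for illustration, and the helper name `build_local_business_jsonld` is my own. Treat it as a starting point under those assumptions, not as the one correct way to mark up a page.

```python
import json


def build_local_business_jsonld(name, street, city, region, rating_value, review_count):
    """Return a JSON-LD <script> tag describing a business and its aggregate rating."""
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        },
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Hypothetical example values; replace them with the real business details.
snippet = build_local_business_jsonld(
    "Example Coffee Co.", "123 Main St", "Atlanta", "GA", 4.6, 87
)
print(snippet)
```

The printed tag would typically go in the page’s HTML, and it is worth running the result through a validation tool before shipping it.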

3. Regularly assess link toxicity

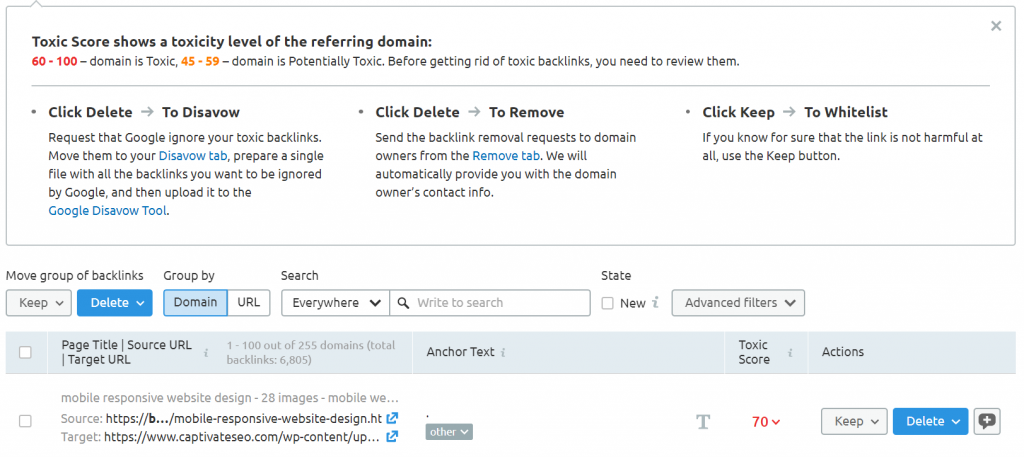

It should be no secret by now that poor quality links pointing to a site can hinder its ability to rank. Even more so, a site that has blatantly built links manually using keyword-stuffed anchor text is at high risk of being deindexed, or removed from Google entirely.

If you just flashed back 10 years to a time when you built a few (hundred?) sketchy links to your site, then consider assessing the site’s link toxicity. Toxic links coming from spammy sources can really ruin your credibility as a trusted site. As such, it’s important to identify and disavow any links that may be hindering your rankings.

[The Backlink Audit Tool in SEMRush makes it easy not only to pinpoint potentially toxic links, but also to take the necessary measures to have certain links removed or disavowed.]
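If you end up disavowing links by hand, Google’s disavow tool accepts a plain text file with one URL or one `domain:` entry per line, and `#` for comments. The sketch below assembles such a file in Python. The function name `build_disavow_file` and the example domains are hypothetical, and deciding which links are actually toxic is still a judgment call that the code does not make for you.

```python
from urllib.parse import urlparse


def build_disavow_file(toxic_urls, whole_domains=()):
    """Return disavow-file text: '#' comments, 'domain:' entries, then single URLs."""
    domains = sorted({d.lower() for d in whole_domains})
    lines = ["# Disavow list compiled from a manual backlink review"]
    lines += [f"domain:{d}" for d in domains]
    for url in sorted(set(toxic_urls)):
        host = (urlparse(url).hostname or "").lower()
        if host in domains:
            continue  # already covered by a domain-level entry
        lines.append(url)
    return "\n".join(lines) + "\n"


# Hypothetical inputs, for illustration only.
disavow_text = build_disavow_file(
    toxic_urls=[
        "http://spammy-directory.example/listing/123",
        "http://link-farm.example/page-a",
    ],
    whole_domains=["link-farm.example"],
)
print(disavow_text)
```

Listing a whole domain is the blunter option, so it is usually reserved for sources where every link looks suspect.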

If there’s one SEO variable that’s sometimes out of your control, it’s backlinks. New, spammy links can arise out of nowhere, making you ponder existential questions about the Internet. Regularly checking in on a site’s backlinks is critical to maintaining a healthy site for your SEO clients.

4. Consistently monitor site health, speed, and performance

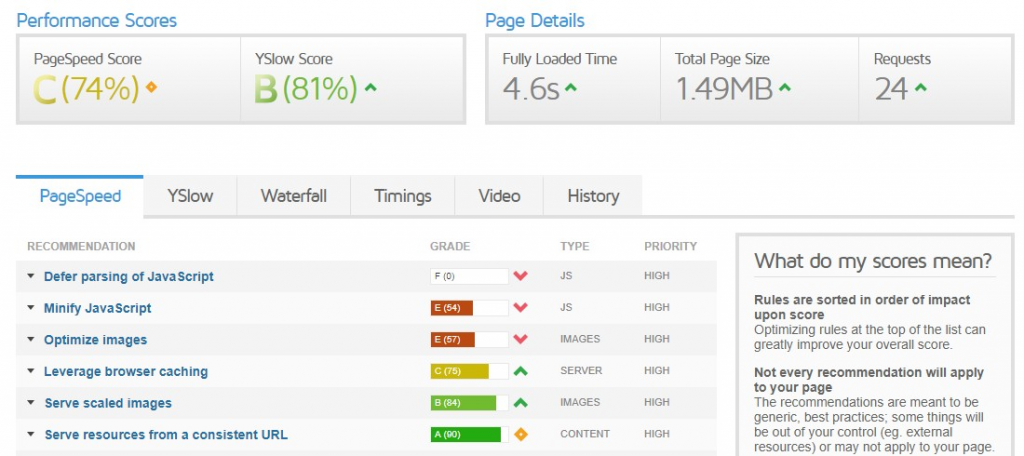

An industry-standard tool for efficiently pinpointing a site’s technical bottlenecks is GTmetrix. With this tool, you can discover key insights about a site’s speed, health, and overall performance, along with actionable recommendations on how to resolve any issues it finds.

No doubt, site speed has become a noteworthy ranking factor. It reflects Google’s mission to serve search users with the best experience possible. As such, fast-loading sites are rewarded, and slow-loading sites will likely fail to realize their full SEO potential.

In addition to GTmetrix, a couple of other tools that help improve a site’s speed and performance are Google PageSpeed Insights and web.dev. Similar to the recommendations offered by GTmetrix and SEMRush, these tools deliver easy-to-digest guidance backed by in-depth analysis across a number of variables.

The page speed improvements recommended by these tools can range from compressing images to minimizing redirects and server requests. In other words, some developer experience can be helpful here.

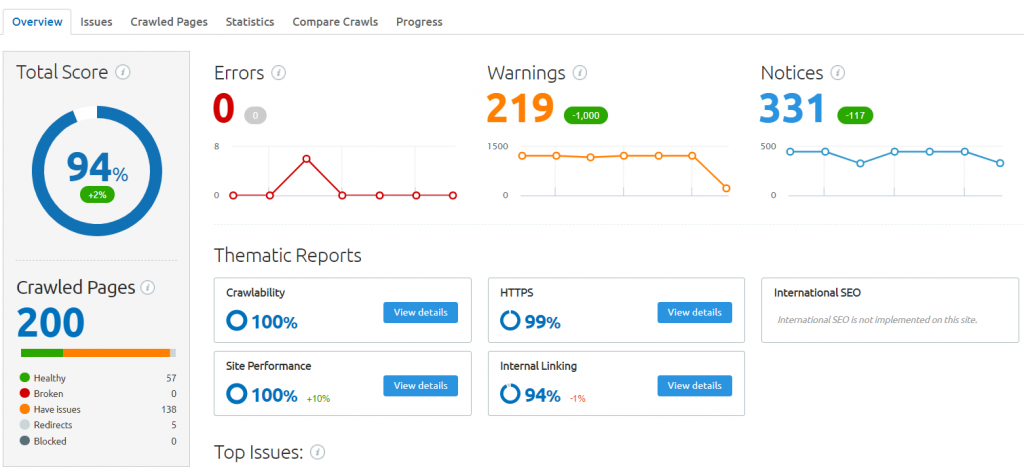

A last core aspect of maintaining optimal site health is keeping crawl errors to a bare minimum. While crawl errors are actually quite simple to monitor, regularly fixing 404 errors and correcting crawl optimization issues can help level up your technical SEO services. These capabilities are available in the Site Audit Tool from SEMRush.

[The intuitive breakdown of the Site Audit Tool’s crawl report makes fixing errors a seamless process. Users can easily find broken links, error pages, inadequate titles and metadata, and other specifics to improve site health and performance.]
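If you export crawl results from an audit tool, a few lines of Python can turn the raw status codes into a quick to-do list. The sketch below assumes the export has already been reduced to `(url, status_code)` pairs. The function name `summarize_crawl` and the sample URLs are hypothetical, and no particular tool’s export format is implied.

```python
from collections import defaultdict


def summarize_crawl(results):
    """Group crawled URLs by outcome, given an iterable of (url, status_code) pairs."""
    buckets = defaultdict(list)
    for url, status in results:
        if 200 <= status < 300:
            buckets["ok"].append(url)
        elif 300 <= status < 400:
            buckets["redirect"].append(url)
        elif status == 404:
            buckets["not_found"].append(url)
        elif 400 <= status < 500:
            buckets["client_error"].append(url)
        elif 500 <= status < 600:
            buckets["server_error"].append(url)
        else:
            buckets["other"].append(url)
    return dict(buckets)


# Hypothetical crawl export, for illustration only.
crawl = [
    ("https://www.site.com/", 200),
    ("https://www.site.com/old-page/", 301),
    ("https://www.site.com/missing/", 404),
    ("https://www.site.com/checkout/", 500),
]
summary = summarize_crawl(crawl)
for outcome, urls in summary.items():
    print(outcome, len(urls))
```

The `not_found` bucket is kept separate from other client errors because 404s are usually the first thing to fix or redirect.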

5. Canonicalize pages and audit robots.txt

If there’s one issue that’s virtually unavoidable, it’s discovering multiple versions of the same page, or duplicate content. As a rather hysterical example, I once came across a site with five iterations of the same “about us” page:

- https://site.com/about-us/

- https://www.site.com/about-us/

- https://www.site.com/about-us

- https://site.com/about-us

- http://www.site.com/about-us

To a search engine, the above looks like five separate pages, all with the exact same content. This causes confusion or, even worse, makes the site appear spammy or shallow with so much duplicate content. The fix for this is canonicalization, which declares one URL as the preferred version of the page.
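To show what collapsing those variants looks like in practice, here is a small Python sketch that maps each of the five URLs above to a single preferred form and prints the matching canonical link tag. It assumes the preferred version is HTTPS, on the `www` host, with a trailing slash. That is a choice I made for the example, and the trailing-slash rule would not suit file-like URLs such as PDFs. The function names are my own.

```python
from urllib.parse import urlsplit, urlunsplit


def _strip_www(host):
    """Drop a leading 'www.' so that www and non-www hosts compare equal."""
    return host[4:] if host.startswith("www.") else host


def canonical_url(url, preferred_host="www.site.com"):
    """Map http/https, www/non-www, and slash/no-slash variants to one preferred URL."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    if _strip_www(host) == _strip_www(preferred_host):
        host = preferred_host
    path = parts.path if parts.path.endswith("/") else parts.path + "/"
    return urlunsplit(("https", host, path, parts.query, ""))


def canonical_link_tag(url):
    """Return the <link rel="canonical"> tag for the page's preferred URL."""
    return f'<link rel="canonical" href="{canonical_url(url)}" />'


variants = [
    "https://site.com/about-us/",
    "https://www.site.com/about-us/",
    "https://www.site.com/about-us",
    "https://site.com/about-us",
    "http://www.site.com/about-us",
]
for variant in variants:
    print(variant, "->", canonical_url(variant))
print(canonical_link_tag(variants[0]))
```

All five variants resolve to `https://www.site.com/about-us/`. In practice the tag is usually paired with 301 redirects, so that visitors and crawlers land on the preferred URL as well.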

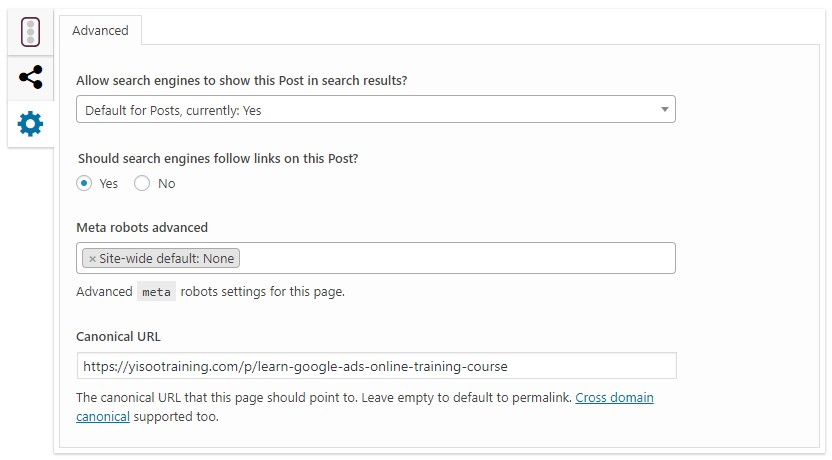

Because canonical tags and duplicate content have been major topics of discussion, most plugins and CMS integrations are equipped with canonicalization capabilities to help keep your SEO dialed in.

[In this figure, the highly popular Yoast SEO plugin for WordPress has a Canonical URL feature found under the gear icon tab. This simple functionality makes it easy to define the preferred, canonical URL for a given page.]

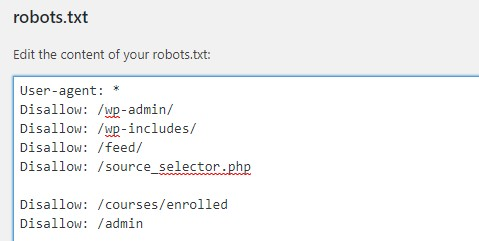

Similarly, the robots.txt file is a communication tool designed to specify which areas of a website should not be processed or crawled. Here, certain URLs can be disallowed, telling search engines not to crawl them. Because the robots.txt file is often updated over time, certain directories or content on a site can end up disallowed unintentionally. In turn, it’s wise to audit a site’s robots.txt file to ensure it aligns with your SEO objectives and to prevent any future conflicts from arising.
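One simple way to audit a robots.txt file is to check a list of pages you care about against its rules. The sketch below does that with Python’s standard `urllib.robotparser` module. The robots.txt contents, the URL list, and the helper name `blocked_urls` are all hypothetical. Keep in mind that Python’s parser uses plain prefix matching, so its verdicts may differ from Google’s for rules that rely on wildcards.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /blog/
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Pages the client expects to rank; a blocked entry here deserves a closer look.
important_pages = [
    "https://www.site.com/about-us/",
    "https://www.site.com/blog/technical-seo/",
    "https://www.site.com/services/",
]
for url in blocked_urls(ROBOTS_TXT, important_pages):
    print("Blocked by robots.txt:", url)
```

In this made-up example the blog post is flagged, which is exactly the kind of leftover rule an audit is meant to catch.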

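Lastly, keep in mind that not all search engine crawlers are created equal. Robots.txt is a set of instructions that well-behaved crawlers honor, so there’s a good chance disallowed pages would still be fetched by less scrupulous bots. Note, too, that a disallow rule blocks crawling rather than indexing: a disallowed URL can still appear in search results if other pages link to it. If you need pages kept out of the index so they are not counted as shallow or duplicate content when the search engine takes measure of your site, use a noindex robots meta tag on pages that remain crawlable, since robots.txt has no supported ‘do not index’ directive.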

Tyler Tafelsky is a Senior SEO Specialist at Captivate Search Marketing based in Atlanta, Georgia. Having been in the industry since 2009, Tyler offers vast experience in the search marketing profession, including technical SEO, content strategy, and PPC advertising.
